Onion Ring Plot Twist: Can We Trust Nutritional Epidemiology?

What if someone told you that to live a long and healthy life you should eat onion rings and avoid salmon?


Well, you should rightly be skeptical. And if you’re not, I’m assuming you have a love of onion rings and a strong confirmation bias.

So why ask such a ridiculous question?

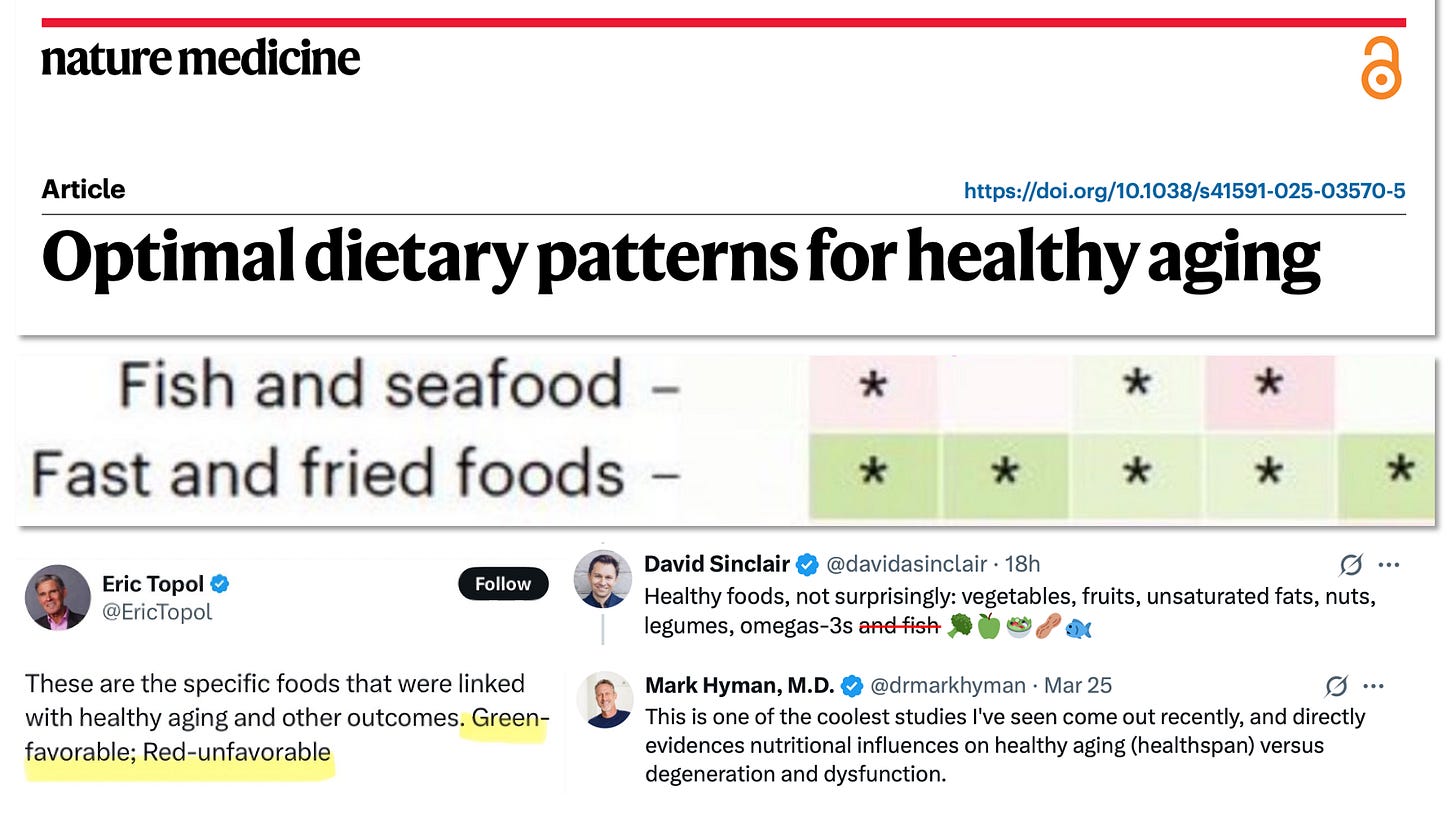

Well, a flock of scientists, doctors and influencers have been tweeting about a big new study published in Nature Medicine titled “Optimal Dietary Patterns for Healthy Aging.”

It was a large nutritional epidemiological study. The researchers took responses from Food Frequency Questionnaires administered to 105,015 participants of the Nurses’ Health Study and the Health Professionals Follow-Up Study and looked for associations between dietary patterns and “healthy aging.” Healthy aging was defined by measures of cognitive, physical and mental health, and by whether participants reached 70 years of age free of chronic disease.

Normally, I’d dive deep into the methodology with you… But I’m not going to do that today. Instead, I want to focus on a single “quirk” in the data.

In Figure 4, clear as crystal, the data suggest that eating fast and fried foods is positively associated with healthy aging and that eating fish and seafood is negatively associated with it.

In this figure, “Green = Good” and “Red = Bad” across six domains, from left to right: Healthy aging, Intact cognitive function, Intact physical function, Intact mental health, Free from chronic disease, and Survived to 70 years of age.

Over the past few days, I’ve tried to point this out to some of the big names in medicine, health and fitness, but have received no response to date. (I’ll update this post with their responses if/when I receive any and if I’m granted permission to share.)

I will also note that this wasn’t a printing error in the figure; they didn’t mistakenly flip-flop the rows. The paper’s discussion section acknowledges: “In the current study… fast and fried foods… [w]ere positively associated with surviving to the age of 70 years…”

One explanation they offer is the “social aspect” of eating away from home. Again, I’m skeptical. Are you?

They also do not comment on why fish intake is associated negatively with healthy aging along some domains.

What Does This Mean?

My aim here is not to try to discredit and disregard this study outright. Certainly, many people have strong opinions about the value (or lack thereof) of nutritional epidemiology. I personally think it can have value, when properly contextualized, caveated and used (primarily) for hypothesis generation.

However, I do think this “oddity” should make people ask themselves whether these epidemiological/associational data should be used to guide how we should eat. Currently, we depend a lot on these types of studies to derive nutritional recommendations. And if the data can suggest onion rings are better for healthy aging than fish… well… either we question the results—or start serving onion rings at longevity clinics. Sorry, salmon. It’s not personal.

I like to keep an open mind and, as I always say, “#StayCurious.” But sometimes we need to apply common sense first.

If this newsletter hit home with you, you may enjoy these past videos on my YouTube Channel about the Food System, Common Sense, Healthy Aging, and the worst “Science” I’ve ever seen.

This study definitely lox credibility, seems fishy to me, and doesn't have a ring of truth about it.

These studies have two major flaws:

1. Can you trust the questionnaire? Just imagine: when a study measures a certain substance, that substance is analyzed in accredited labs, lots and lots of standards are applied, and accreditation requirements are checked. And here? What scrutiny was applied to the participants’ answers? To be honest, you are lucky if participants answer honestly at all. But even honest answers can be miles away from accurate ones.

2. Confounding variables: in this study, the participants were nurses and other health professionals, so even if the conclusion is true, it is only valid for that population. But this niche population is just the tip of the iceberg when it comes to confounding variables. There are so many of them in such studies, and their noise just adds up. And it cuts both ways: if you take them into account, you risk overadjustment; if you don’t take them into account... well, you didn’t take them into account! Even a well-conducted study can produce misleading results if overadjustment introduces excessive noise, masks true effects, or amplifies statistical instability. Sensitivity analyses, careful confounder selection, and causal inference tools (e.g., instrumental variables, directed acyclic graphs) can help mitigate these risks, but who does that?
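The sensitivity analyses mentioned above need not be exotic. One widely used option is the E-value of VanderWeele and Ding (2017): the minimum strength of association, on the risk-ratio scale, that an unmeasured confounder would need with both the exposure and the outcome to fully explain away an observed association. The sketch below computes it for a point estimate only. The `e_value` function name and the example risk ratios are illustrative assumptions, not numbers from the study, and other effect measures (such as odds ratios for common outcomes) would need converting to an approximate risk ratio first.

```python
import math


def e_value(rr: float) -> float:
    """E-value for an observed risk ratio (point estimate only).

    Returns the minimum risk ratio an unmeasured confounder would need with
    both exposure and outcome to fully explain away the observed association.
    """
    if rr <= 0:
        raise ValueError("risk ratio must be positive")
    if rr < 1:
        # Protective associations are handled by taking the reciprocal first.
        rr = 1 / rr
    return rr + math.sqrt(rr * (rr - 1))


# Hypothetical risk ratios, chosen only to show how the E-value scales.
for observed in (1.05, 1.2, 2.0, 0.8):
    print(f"RR = {observed:.2f} -> E-value = {e_value(observed):.2f}")
```

This makes it easy to see how little unmeasured confounding it would take to erase a weak association: for RR = 1.05 the E-value is only about 1.28.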

Finally, a small shopping list of issues with confounding variables that make most epidemiological studies laughable (but unfortunately still taken seriously in popular media):

1. Overadjustment - when a study controls for variables that are intermediates in the causal pathway, which can attenuate or even reverse a true effect. Such an intermediate variable is correlated with the hypothesized cause ("exposure" in epidemiology) or with the effect ("outcome" in epidemiology) but is not a true confounder.

For example, if an exposure increases blood pressure and, through it, cardiovascular disease (CVD) severity, and the study adjusts for blood pressure when analyzing cardiovascular risk, it may mask or even reverse the exposure's true effect. Thus, depending on how the confounders are tinkered with, we could conclude that salt prevents CVD (or, of course, that it causes it).

2. Residual Confounding and Measurement Noise - If confounders are not measured accurately or completely, statistical adjustment can still leave residual confounding. In studies with high variability in confounders, noise can overwhelm the signal, leading to wide confidence intervals, spurious associations, or null results. Wide confidence intervals would at least signal that there is a problem with the study.

3. Model Instability and Multicollinearity - Including too many correlated confounders can cause statistical instability (e.g., variance inflation, collinearity), making it difficult to isolate the independent effect of the exposure. This can lead to wide confidence intervals, false negatives (Type II errors), and effect estimates pointing in the wrong direction.

4. Data-Dredging and Multiple Comparisons - When adjusting for many factors, the risk of spurious findings increases, especially if many subgroup analyses are performed. Even with proper statistical corrections, extreme variability in data can amplify false signals and reverse the result.

5. Effect Modification and Heterogeneous Populations - If intervention and control groups differ drastically in confounding factors, standard adjustment methods (e.g., regression, propensity scores) might fail to balance them adequately. Subgroup interactions might distort findings, making generalization difficult.
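Item 1 of the shopping list (overadjustment) and its salt example can be reproduced with a toy simulation. The sketch below assumes a purely hypothetical linear world in which salt raises blood pressure, blood pressure raises a CVD risk score, and salt also has a small, made-up protective direct effect. The crude estimate recovers the total (harmful) effect, while "adjusting" for the mediator leaves only the direct path and flips the sign. All variable names and effect sizes are invented for illustration.

```python
import random


def cov(a, b):
    """Sample covariance of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)


def crude_slope(y, x):
    """OLS slope of y on x alone (unadjusted estimate)."""
    return cov(x, y) / cov(x, x)


def adjusted_slope(y, x, z):
    """OLS coefficient on x in the model y ~ x + z (two-predictor closed form)."""
    vxx, vzz, vxz = cov(x, x), cov(z, z), cov(x, z)
    vxy, vzy = cov(x, y), cov(z, y)
    return (vxy * vzz - vxz * vzy) / (vxx * vzz - vxz ** 2)


rng = random.Random(1)
n = 50_000
DIRECT_EFFECT = -0.2  # hypothetical small protective direct effect of salt

salt = [rng.gauss(0, 1) for _ in range(n)]
blood_pressure = [s + rng.gauss(0, 0.5) for s in salt]  # salt -> BP (mediator)
cvd_risk = [bp + DIRECT_EFFECT * s + rng.gauss(0, 1)     # BP -> CVD, plus direct path
            for s, bp in zip(salt, blood_pressure)]

total = crude_slope(cvd_risk, salt)  # true total effect = 1.0 - 0.2 = 0.8
overadjusted = adjusted_slope(cvd_risk, salt, blood_pressure)  # direct path only, near -0.2
print(f"crude (total) effect of salt:  {total:+.2f}")
print(f"'adjusted' for blood pressure: {overadjusted:+.2f}")
```

Setting `DIRECT_EFFECT = 0` instead makes the adjusted estimate hover around zero: the harmful effect is then masked rather than reversed.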
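Item 2 of the shopping list (residual confounding) can be illustrated the same way. In this hypothetical sketch, diet has no effect at all on the outcome; both are driven by an unmeasured "health consciousness" variable. Adjusting for a perfectly measured confounder removes the spurious association, but adjusting for a noisy measurement of it (as a questionnaire might provide) removes only part of it. The variable names and noise levels are assumptions for illustration only.

```python
import random


def cov(a, b):
    """Sample covariance of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)


def adjusted_slope(y, x, z):
    """OLS coefficient on x in the model y ~ x + z (two-predictor closed form)."""
    vxx, vzz, vxz = cov(x, x), cov(z, z), cov(x, z)
    vxy, vzy = cov(x, y), cov(z, y)
    return (vxy * vzz - vxz * vzy) / (vxx * vzz - vxz ** 2)


rng = random.Random(2)
n = 50_000
health = [rng.gauss(0, 1) for _ in range(n)]     # true confounder (never observed directly)
diet = [h + rng.gauss(0, 1) for h in health]     # exposure, partly driven by the confounder
outcome = [h + rng.gauss(0, 1) for h in health]  # outcome: diet has NO causal effect

results = {}
for noise_sd in (0.0, 0.5, 1.0, 2.0):
    # Error-prone measurement of the confounder, e.g. from a questionnaire.
    measured = [h + rng.gauss(0, noise_sd) for h in health]
    results[noise_sd] = adjusted_slope(outcome, diet, measured)
    print(f"confounder noise SD = {noise_sd:.1f} -> adjusted diet effect = {results[noise_sd]:+.3f}")
```

Under these assumptions the adjusted estimate tends to s²/(1 + 2s²) for a measurement noise SD of s, so it climbs from about 0 toward the unadjusted value of 0.5 as the confounder is measured worse, even though the true effect is exactly zero.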
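For item 3 of the shopping list, the variance inflation factor (VIF) is the standard diagnostic: with two predictors whose correlation is rho, VIF = 1 / (1 - rho²), and the variance of each coefficient is inflated by that factor. The sketch below checks this by simulation under hypothetical settings (a true exposure effect of 0.2, samples of 200, 300 replications) and counts how often the estimate comes out with the wrong sign. The function name `coefficient_spread` and all parameter values are illustrative assumptions.

```python
import random
import statistics


def cov(a, b):
    """Sample covariance of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)


def adjusted_slope(y, x, z):
    """OLS coefficient on x in the model y ~ x + z (two-predictor closed form)."""
    vxx, vzz, vxz = cov(x, x), cov(z, z), cov(x, z)
    vxy, vzy = cov(x, y), cov(z, y)
    return (vxy * vzz - vxz * vzy) / (vxx * vzz - vxz ** 2)


def coefficient_spread(rho, true_effect=0.2, n=200, reps=300, seed=3):
    """Return (SD of the estimated exposure effect, share of wrong-sign estimates)
    across repeated samples in which covariate z correlates with exposure x at rho."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(reps):
        x = [rng.gauss(0, 1) for _ in range(n)]
        # z has unit variance and correlation rho with x.
        z = [rho * xi + (1 - rho ** 2) ** 0.5 * rng.gauss(0, 1) for xi in x]
        y = [true_effect * xi + 0.5 * zi + rng.gauss(0, 1) for xi, zi in zip(x, z)]
        estimates.append(adjusted_slope(y, x, z))
    wrong_sign = sum(e < 0 for e in estimates) / reps
    return statistics.stdev(estimates), wrong_sign


spread = {}
for rho in (0.0, 0.5, 0.9, 0.99):
    vif = 1 / (1 - rho ** 2)
    spread[rho] = coefficient_spread(rho)
    sd, wrong = spread[rho]
    print(f"rho={rho:.2f}  VIF={vif:6.1f}  SD of estimate={sd:.3f}  wrong sign={wrong:.0%}")
```

At rho = 0.99 the VIF is about 50, so the standard error grows roughly sevenfold and a sizeable share of the estimates point in the wrong direction, even though the true effect never changes.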
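Item 4 of the shopping list (data dredging) is easy to demonstrate too. The sketch below generates 100 hypothetical "food items" that are pure noise, tests each one's correlation with a pure-noise outcome in 500 simulated participants, and counts how many pass p < 0.05 with and without a Bonferroni correction. It uses the Fisher z approximation for the correlation test; the numbers of items and participants are arbitrary assumptions.

```python
import math
import random
from statistics import NormalDist


def pearson_r(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    sab = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    saa = sum((x - ma) ** 2 for x in a)
    sbb = sum((y - mb) ** 2 for y in b)
    return sab / math.sqrt(saa * sbb)


def p_value(r, n):
    """Two-sided p-value for H0: correlation = 0, via the Fisher z approximation."""
    z = math.atanh(r) * math.sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


rng = random.Random(4)
n_participants, n_items, alpha = 500, 100, 0.05
outcome = [rng.gauss(0, 1) for _ in range(n_participants)]  # unrelated to every item

p_values = []
for _ in range(n_items):
    intake = [rng.gauss(0, 1) for _ in range(n_participants)]  # pure-noise "food item"
    p_values.append(p_value(pearson_r(intake, outcome), n_participants))

naive_hits = sum(p < alpha for p in p_values)
bonferroni_hits = sum(p < alpha / n_items for p in p_values)
print(f"'significant' items at p < {alpha}: {naive_hits} of {n_items}")
print(f"significant after Bonferroni (p < {alpha / n_items}): {bonferroni_hits}")
```

Every "hit" here is a false positive by construction; with 100 independent null tests, about five are expected at the 0.05 level. Bonferroni is the bluntest correction, and less conservative procedures such as Holm or Benjamini-Hochberg exist, but none of them fixes bias from confounding.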